This is a draft of an appendix chapter for my Napkin project.

In the world of olympiad math, there’s a famous functional equation that goes as follows:

Everyone knows what its solutions are! There’s an obvious family of solutions . Then there’s also this family of… uh… noncontinuous solutions (mumble grumble) pathological (mumble mumble) Axiom of Choice (grumble).

There’s also this thing called Zorn’s Lemma. It sounds terrifying, because it’s equivalent to the Axiom of Choice, which is also terrifying because why not.

In this post I will try to de-terrify these things, because they’re really not terrifying and I’m not sure why no one bothered to explain this properly yet. I have yet to see an olympiad handout that explains how you would construct a pathological solution, even though it’s really quite natural. So let me fix this problem now…

1.Let’s Construct a Monster

Let’s try to construct a “bad” function and see what happens.

By scaling, let’s assume WLOG that . Thus

for every integer

, and you can easily show from here that

So is determined for all rationals. And then you get stuck.

None of this is useful for determining, say, . You could add and subtract rational numbers all day and, say,

isn’t going to show up at all.

Well, we’re trying to set things on fire anyways, so let’s set

because why not? By the same induction, we get , and then that

Here and

are rationals. Well, so far so good — as written, this is a perfectly good solution, other than the fact that we’ve only defined

on a tiny portion of the real numbers.

Well, we can do this all day:

Perfectly consistent.

You can kind of see how we should keep going now. Just keep throwing in new real numbers which are “independent” to the previous few, assigning them to whatever junk we want. It feels like it should be workable. . .

In a moment I’ll explain what “independent” means (though you might be able to guess already), but at the moment there’s a bigger issue: no matter how many numbers we throw, it seems like we’ll never finish. Let’s address the second issue first.

2. Review of Finite Induction

When you do induction, you get to count off ,

,

, … and so on. So for example, suppose we had a “problem” such as the following: Prove that the intersection of

open intervals is either

or an open interval. You can do this by induction easily: it’s true for

, and for the larger cases it’s similarly easy.

But you can’t conclude from this that infinitely many open intervals intersect at some open interval. Indeed, this is false: consider the intervals

This infinite set of intervals intersects at a single point !

The moral of the story is that induction doesn’t let us reach infinity. Too bad, because we’d have loved to use induction to help us construct a monster. That’s what we’re doing, after all — adding things in one by one.

3. Transfinite Induction

Well, it turns out we can, but we need a new notion of number.

For this we need a notion of an ordinal number. I defined these in their full glory a previous blog post, but I don’t need the full details of that. Here’s what I want to say: after all the natural numbers

I’ll put a new number called , representing how large the natural numbers are. After that there’s a number called

and eventually a number called .

The list goes on:

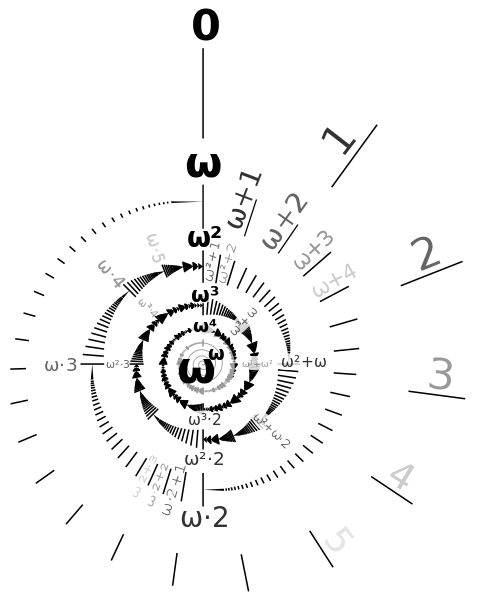

Pictorially, it kind of looks like this:

Anyways, in the same way that natural numbers dominate all finite sets, the ordinals dominate all the sets.

Theorem 1 For every set

there’s some ordinal

which is bigger than it.

But it turns out (and you can intuitively see) that as large as the ordinals grow, there is no infinite descending chain. Meaning: if I start at an ordinal (like ) and jump down, I can only take finitely many jumps before I hit

. (To see this, try writing down a chain starting at

yourself.) Hence, induction and recursion still work verbatim:

Theorem 2 Given a statement

, suppose that

is true, and

- If

is true for all

, then

is true.

Then

is true.

Similarly, you’re allowed to do recursion to define if you know the value of

for all

.

The difference from normal induction or recursion is that we’ll often only do things like “define ”. But this is not enough to define

for all

. To see this, try using our normal induction and see how far we can climb up the ladder.

Answer: you can’t get ! It’s not of the form

for any of our natural numbers

— our finite induction only lets us get up to the ordinals less than

. Similarly, the simple

doesn’t let us hit the ordinal

, even if we already have

for all

. Such ordinals are called limit ordinals. The ordinal that are of the form

are called successor ordinals.

So a transfinite induction or recursion is very often broken up into three cases. In the induction phrasing, it looks like

- (Zero Case) First, resolve

.

- (Successor Case) Show that from

we can get

.

- (Limit Case) Show that

holds given

for all

, where

is a limit ordinal.

Similarly, transfinite recursion often is split into cases too.

- (Zero Case) First, define

.

- (Successor Case) Define

from

.

- (Limit Case) Define

from

for all

, where

is a limit ordinal.

In both situations, finite induction only does the first two cases, but if we’re able to do the third case we can climb far above the barrier .

4. Wrapping Up Functional Equations

Let’s return to solving our problem.

Let denote the set of “base” numbers we have at the

the step. In our example, we might have

and we’d like to keep building up until we can express all real numbers. For completeness, let me declare

.

First, I need to be more precise about “independent”. Intuitively, this construction is working because

is never going to equal zero for rational numbers ,

,

,

(other than all zeros). In general, a set

of numbers is “independent” if the combination

never occurs for rational numbers unless

. Here

are distinct. Note that even if

is infinite, I can only take finite sums! (This notion has a name: we want

to be linearly independent over

.)

When do we stop? We’d like to stop when we have a set that’s so big, every real number can be written in terms of the independent numbers. (This notion also has a name: it’s called a

-basis.) Let’s call such a set spanning; we stop once we hit a spanning set.

The idea that we can induct still seems okay: suppose isn’t spanning. Then there’s some number that is independent of

, say

or something. Then we just add it to get

. And we keep going.

Unfortunately, as I said before it’s not enough to be able to go from to

(successor case); we need to handle the limit case as well. But it turns out there’s a trick we can do. Suppose we’ve constructed all the sets

,

,

, …, one for each positive integer

, and none of them are spanning. The next thing I want to construct is

; somehow I have to “jump”. To do this, I now take the infinite union

The elements of this set are also independent (why?).

Ta-da! With the simple trick of “union all the existing sets”, we’ve just jumped the hurdle to the first limit ordinal . Then we can construct

,

, …, and for the next limit we just do the same trick of “union-ing” all the previous sets.

So we can formalize the process as follows:

- Let

.

- For a successor stage

, add any element to

to obtain

.

- For a limit stage

, take the union

.

How do we know that we’ll stop eventually? Well, the thing is that this process consumes a lot of real numbers. In particular, the ordinals get larger than the size of . Hence if we don’t stop we will quite literally reach a point where we have used up every single real number. Clearly that’s impossible, because by then the elements can’t possibly be independent! (EDIT Dec 20 2015: To be clear, the claim that “ordinals get larger than the size of the reals” requires the Axiom of Choice; one can’t do this construction using transfinite induction alone. Thanks reddit for calling me out on this.)

So by transfinite recursion (and Choice), we eventually hit some which is spanning: the elements are all independent, but every real number can be expressed using it. Done! This set has a name: a Hamel basis.

Thus this problem is dead, dead, dead. Any questions?

5. Zorn’s Lemma

Now I can tell you what Zorn’s Lemma is: it lets us do the same thing in any poset.

We can think of the above example as follows: consider all sets of independent elements. These form a partially ordered set by inclusion, and what we did was quite literally climb up a chain

It’s not quite climbing since we weren’t just going one step at a time: we had to do “jumps” to get up to and resume climbing. But the main idea is to climb up a poset until we’re at the very top; in the previous case, when we reached the spanning set.

The same thing works verbatim with any partially ordered set . Let’s define some terminology. A local maximum (or maximal element) of the entire poset

is an element which has no other elements strictly greater than it.

Now a chain of length is a set of elements

for every

such that

. (Observe that a chain has a last element if and only if

is a successor ordinal, like

.) An upper bound to a chain is an element

which is greater than or equal to all elements of the chain. In particular, if

is a successor ordinal, then just taking the last element of the chain works.

In this language, Zorn’s Lemma states that

Theorem 3 (Zorn’s Lemma) Let

be a nonempty partially ordered set. If every chain has an upper bound, then

has a local maximum.

Chains with length equal to a successor ordinal always have upper bounds, but this is not true in the limit case. So the hypothesis of Zorn’s Lemma is exactly what lets us “jump” up to define and other limit ordinals. And the proof of Zorn’s Lemma is straightforward: keep climbing up the poset at successor stages, using Zorn’s condition to jump up at limit stages, and thus building a really long chain. But we have to eventually stop, or we literally run out of elements of

. And the only possible stopping point is a local maximum.

If we want to phrase our previous solution in terms of Zorn’s Lemma, we’d say: Proof: Look at the poset whose elements are sets of independent real numbers. Every chain has an upper bound

(which you have to check is actually an element of the poset). Thus by Zorn, there is a local maximum

. Then

must be spanning, because otherwise we could add an element to it.

So really, Zorn’s Lemma is encoding all of the work of climbing that I argued earlier. It’s a neat little package that captures all the boilerplate, and tells you exactly what you need to check.

One last thing you might ask: where is the Axiom of Choice used? Well, the idea is that for any chain there could be lots of ‘s, and you need to pick one of them. Since you are making arbitrary choices infinitely many times, you need the Axiom of Choice. But really, it’s nothing special. [EDIT: AM points out that in order to talk about cardinalities in Theorem 1, one also needs the Axiom of Choice.]

6. Conclusion

In the words of Timothy Gowers,

If you are building a mathematical object in stages and find that (i) you have not finished even after infinitely many stages, and (ii) there seems to be nothing to stop you continuing to build, then Zorn’s lemma may well be able to help you.

Really, there’s nothing tricky at all here. People seem scared of Zorn’s Lemma, and claim it’s not intuitive or something. But really, all we’re doing is climbing up a poset. Nothing tricky at all.

Hi, thank a lot for this post. I am not a mathematician but an economist (phd). I do not get why the Zorn’s lemma works only on partial ordered set and not any posets. I will really appreciate your help in explaining why the antisymmetry is required and if there is a way around it.

LikeLike

Perhaps we’re not agreeing on terms: the way I learned it, “poset” is an abbreviation for “partially ordered set” :) More to the point, what do you mean by “antisymmetry”?

LikeLike

Actually, perhaps by antisymmetry you mean

The reason you need this condition is to guarantee that as you climb up the poset, all the elements in your chain are distinct. Otherwise you could just be going in circles:

and the argument that you’d eventually run out of elements in doesn’t hold!

doesn’t hold!

I’m not sure if there’s a way around this, because I think if you have a relation that does not satisfy antisymmetry, a poset isn’t the right way to think about it — put another way, I can’t think of any relation without this property where I would still want to think about a “maximal” element. But if you have a specific example in mind, I would love to hear it! :)

LikeLike

Hi, thanks a lot for the replay you got my question perefectly.

The example:

Let D be a acylcic binary realtion on a set X, and let P be the set of all reflxive and transtive realtions on X. The beinary relation M on P is defined by the rule (≽,≽′)∈M if for all x,y:(x,y)∈D, x≽′y implies x≽y. M is reflxive and transtive on P but is not antisymatric. For example: for X={x,y,z}, D={(x,y)}, ≽={(x,y)}, and ≽’={(x,y),(x,z)}, we have (≽,≽′)∈M and (≽′,≽)∈M but ≽≠≽′.

As for your argument about no running out of elments, if a=b then you simply cut this chain (and any other chain with two sequantial indentical elments), right? but then can’t we cut, in the same way, any sequantial pairs (≽,≽′)∈M and (≽′,≽)∈M ?

If you have time I can be more presice about this last idea.

Thanks a gain,

Guy

LikeLike

Sorry for writing too much but I was to eager to answer earlier that I immediately gave my example instead of a much simpler one. Let ≽ on ℝ² defined by the rule A≽B if a₁⋅a₂≥b₁⋅b₂. While ≽ is not antisymmetric it has a natural meaning of ≽- maximal element.

LikeLike

OK, I see. :)

You do *need* the antisymmetry condition; for example, without it the ordering on a two-element set

on a two-element set  with

with  and

and  is a counterexample. This poset has no “maximal elements” yet every element is an “upper bound” to any chain!

is a counterexample. This poset has no “maximal elements” yet every element is an “upper bound” to any chain!

I’ll have to think about what new hypotheses you’d have to add in order to get this to work.

LikeLike

When I was taking this course, a reflexive and transitive relation was called a preorder.

The thing is that a preorder generates a partial order, by taking the quotient by the equivalence relation $a\leq b$ and $b\leq a$, so you can apply Zorn lemma to the induced partial order and get:

If a preordered set has an upper bound for every chain, then it has a “maximal” element, where “maximal” element $a$ means that $a\leq b$ imply $b\leq a$.

LikeLike

It seems that this construction will fail to yield a non-linear additive map from to

to  if you pick specific values for the coefficients of the terms that make up

if you pick specific values for the coefficients of the terms that make up  , no?

, no?

To explain what I mean, if we set , we must have that

, we must have that  , which means that

, which means that  is linear, at least for numbers of the form

is linear, at least for numbers of the form  . Thus we must add the requirement that

. Thus we must add the requirement that  , no?

, no?

LikeLiked by 1 person

Yep, you’re right! In other words, this construction will yield the linear functions as well as the non-linear ones.

LikeLiked by 1 person

I guess I am not getting a simple fact about ordinals here:

If I start at and jump down, why will I reach

and jump down, why will I reach  in a finite number of steps?

in a finite number of steps?  has no immediate predecessor.

has no immediate predecessor.

LikeLiked by 1 person

By jump down from I mean a decreasing chain

I mean a decreasing chain  . So in your case $\latex \alpha_1$ has to be finite, since

. So in your case $\latex \alpha_1$ has to be finite, since  has no immediate predecessor.

has no immediate predecessor.

LikeLiked by 1 person

The link that says it goes to this post on your Top Posts page instead takes me to a WordPress log-in screen.

LikeLike

Thanks, should be fixed now.

LikeLike

Hi Evan, thanks for the post. I don’t get how the Axiom of Choice is used in the transfinite induction. Why do you need it in Theorem 1 and to show that the ordinals eventually exceed the size of the reals?

LikeLike